A few days ago I mentioned that I had started doing some static file performance runs on a handful of web servers. Here are the results!

Please refer to the previous article on setup details for info on exactly what and how I’m testing this time. Benchmark results apply only to the narrow use case being tested, and this one is no different.

The results are ordered from slowest to fastest peak numbers produced by each server.
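Ordering by peak throughput can give a different ranking than ordering by throughput at low concurrency. Here is a minimal sketch of that idea. The server names and numbers are made up purely for illustration and are not results from these runs.

```python
# Hypothetical throughput curves: requests/second at concurrency 1, 2, 3, ...
# These values are invented for illustration only; they are NOT measured results.
curves = {
    "server_a": [900, 1700, 2300, 2400, 2400],  # strong start, then flattens out
    "server_b": [600, 1200, 1800, 2300, 2600],  # slow start, keeps scaling
    "server_c": [500, 800, 900, 900, 900],      # never competitive
}


def rank_by_peak(curves):
    """Order server names from slowest to fastest peak throughput."""
    return sorted(curves, key=lambda name: max(curves[name]))


def rank_at(curves, index):
    """Order server names from slowest to fastest at one concurrency step."""
    return sorted(curves, key=lambda name: curves[name][index])


peak_order = rank_by_peak(curves)
single_client_order = rank_at(curves, 0)

print("by peak:          ", peak_order)
print("at one client:    ", single_client_order)
```

In this made-up data, `server_b` loses to `server_a` with a single client but comes out ahead on peak numbers, which is the kind of effect to keep in mind when reading the ranking below.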

9. Monkey (0.9.3-1 debian package)

The monkey server was not able to complete the runs so it is not included in the graphs. At a concurrency of 1, it finished the 30 minute run with an average of only 133 requests/second, far slower than any of the others. With only two concurrent clients it started erroring out on some requests so I stopped testing it. Looks like this one is not ready for prime time yet.

8. G-WAN (3.3.28)

I had seen some of the performance claims around G-WAN so decided to try it, even though it is not open source. All the tests I’d seen of it had been done running on localhost so I was curious to see how it behaves under a slightly more realistic type of load. Turns out, not too well. Aside from monkey, it was the slowest of the group.

7. Apache HTTPD, Event MPM (2.2.16-6+squeeze8 debian package)

I was surprised to see the event MPM do so badly. To be fair, it should do better in a benchmark with large numbers of mostly idle clients, which is not what this particular benchmark tested. At most points in the test it was also the highest consumer of CPU.

6. lighttpd (1.4.28-2+squeeze1 debian package)

Here we get to the first of the serious players (for this test scenario). lighttpd starts out strong up to 3 concurrent clients. After that it stops scaling up so it loses some ground in the final results. Also, lighttpd is the lightest user of CPU of the group.

5. nginx (0.7.67-3+squeeze2 debian package)

The nginx throughput curve is just about identical to lighttpd, just shifted slightly higher. The CPU consumption curve is also almost identical. These two are twins separated at birth. While nginx uses a tiny bit more CPU than lighttpd, it makes up for it with higher throughput.

4. cherokee (1.0.8-5+squeeze1 debian package)

Cherokee just barely edges out nginx at the higher concurrencies tested so it ends up fourth. To be fair, nginx was faster than cherokee at most of the lower concurrencies though. Note, however, that cherokee uses quite a bit more CPU to deliver its numbers so it is not as efficient as nginx.

3. Apache HTTPD, Worker MPM (2.2.16-6+squeeze8 debian package)

Apache third, really? Yes, but only because this ranking is based on the peak numbers of each server. With worker MPM, apache starts out quite a bit behind lighttpd/nginx/cherokee at lower client concurrencies. However, as those others start to stall as concurrency increases, apache keeps going higher. Around five concurrent clients it catches up to lighttpd and around eight clients it catches up to nginx and cherokee. At ten it scores a throughput just slightly above those two, securing third place in this test. Looking at CPU usage though, at that point it has just about maxed out the CPU (about 1% idle), making it the highest CPU consumer of this group, so it is not very efficient.

2. varnish (2.1.3-8 debian package)

Varnish is not really a web server, of course, so in that sense it is out of place in this test. But it can serve (cached) static files and has been included in other similar performance tests so I decided to include it here.

Varnish throughput starts out quite a bit slower than nginx, right on par with lighttpd and cherokee at lower concurrencies. However, varnish scales up beautifully. Unlike all the previous servers, its throughput curve does not flatten out as concurrency increases in this test; it keeps going higher. Around four concurrent users it surpasses nginx and only keeps going higher all the way to ten.

Varnish was able to push network utilization to 90-94%. The only drawback is that delivering its performance does use up a lot of CPU… only Apache used more CPU than varnish in this test. At ten clients, there is only 9% idle CPU left.

1. heliod (0.2)

heliod had the highest throughput at every point tested in these runs. It is slightly faster than nginx at sequential requests (one client) and then pulls away.

heliod is also quite efficient in CPU consumption. Up to four concurrent clients it is the lightest user of CPU cycles even though it produced higher throughput than all the others. At higher concurrencies, it used slightly more CPU than nginx/lighttpd although it makes up for it with far higher throughput.

heliod was also the only server able to saturate the gigabit connection (at over 97% utilization). Given that there is 62% idle CPU left at that point, I suspect if I had more bandwidth heliod might be able to score even higher on this machine.

These results should not be much of a surprise… after all heliod is not new, it is the same code that has been setting benchmark records for over ten years (it just wasn’t open source back then). Fast then, still fast today.

If you are running one of these web servers and using varnish to accelerate it, you could switch to heliod by itself and both simplify your setup and gain performance at the same time. Food for thought!

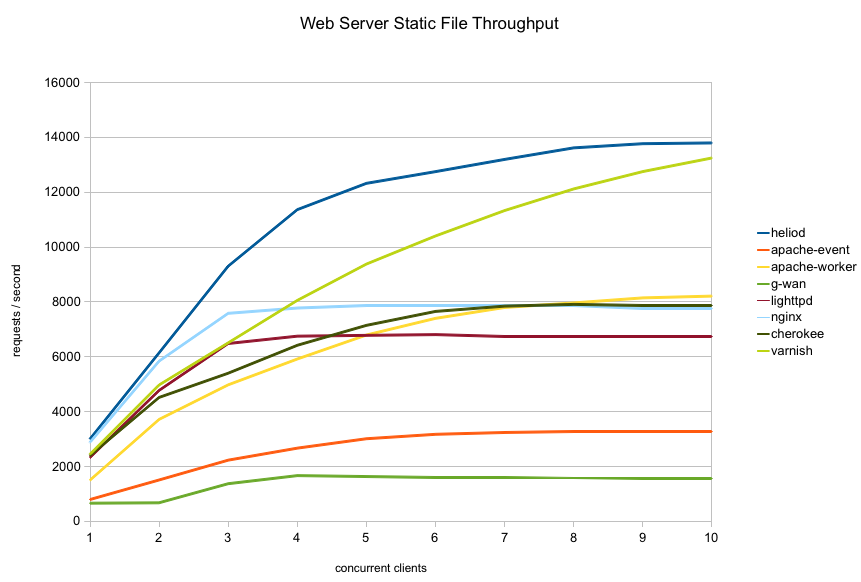

All right, let’s see some graphs.

First, here is the overall throughput graph for all the servers tested:

As you can see the servers fall into three groups in terms of throughput:

- apache-event and g-wan are not competitive in this crowd

- apache-worker/nginx/lighttpd/cherokee are quite similar in the middle

- varnish and heliod are in a class of their own at the high end

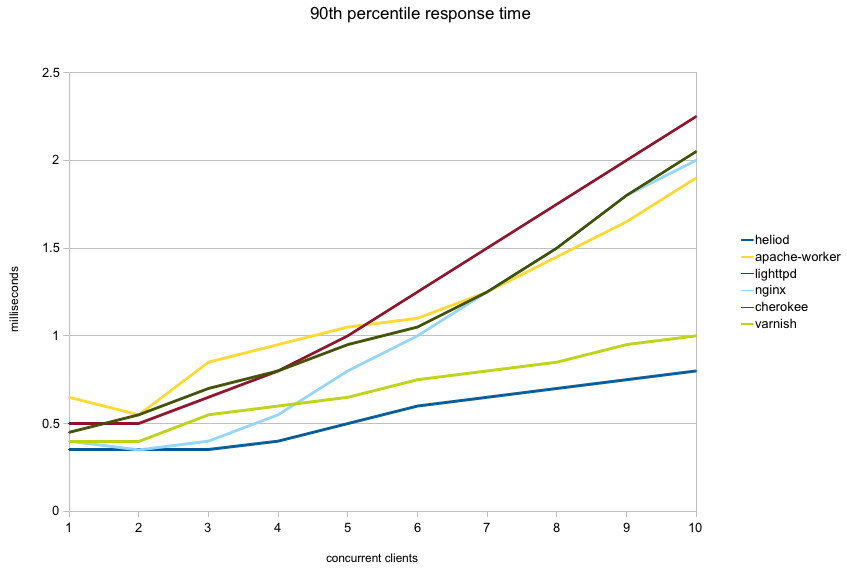

Next graph shows the 90th percentile response time for each server. That is, 90 percent of all requests completed in this time or less. I left out apache-event and g-wan from the graph to avoid compressing the more interesting part of the graph:
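For readers unfamiliar with percentiles, here is a minimal sketch of the definition above using the nearest-rank method. The sample response times are invented for illustration, and faban may compute its percentiles differently (for example from histogram buckets), so treat this as a sketch of the concept rather than the tool's actual calculation.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Smallest sample value such that at least pct percent of samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Nearest-rank: 1-based rank of the value that covers pct percent of samples.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in milliseconds (made-up values, one slow outlier).
response_times_ms = [12, 15, 11, 14, 13, 90, 12, 16, 13, 14]

p90 = percentile_nearest_rank(response_times_ms, 90)
covered = sum(1 for t in response_times_ms if t <= p90)
print(f"p90 = {p90} ms, {covered} of {len(response_times_ms)} requests at or below it")
```

Note how the single slow request does not affect the 90th percentile here, which is why percentiles are often preferred over averages for response times.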

The next graph shows CPU idle time (percent) for each server through the run. The spikes to 100% between each step are due to the short idle interval between each run as faban starts the next run.

The two apache variants (red and orange) are the only ones who maxed out the CPU. Varnish (light green) also uses quite a bit of CPU and comes close (9% idle). On the other side, lighttpd (dark red) and nginx (light blue) put the least load on the CPU with about 72% idle.

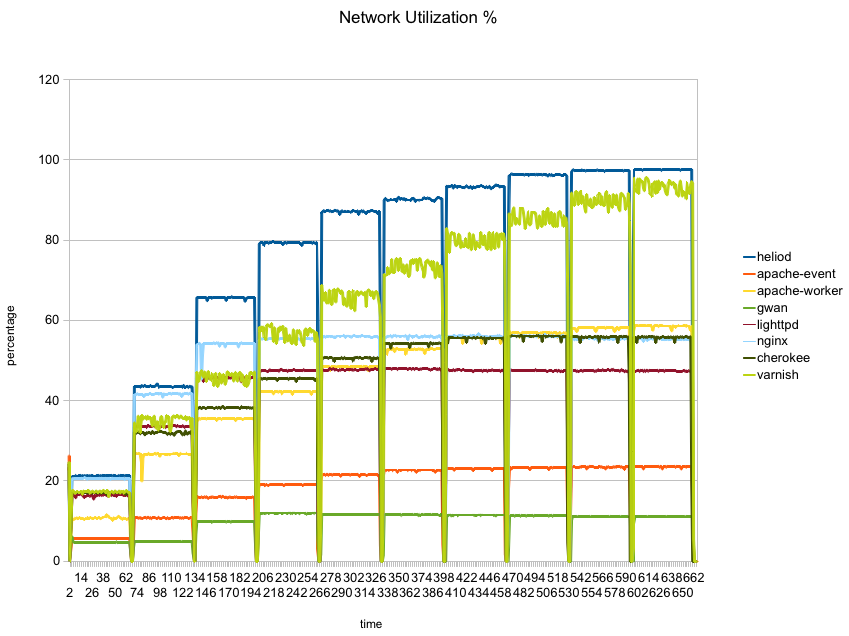

Finally, the next graph shows network utilization percentage of the gigabit interface:

Here heliod (blue) is the only one which manages to saturate the network, with varnish coming in quite close. None of the others manage to reach even 60% utilization.
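To put the utilization percentages in perspective, here is a small sketch converting between payload rate and percent of a gigabit link. It assumes a nominal link speed of 1,000,000,000 bits per second and ignores protocol framing overhead; the function names are mine, and nicstat's own utilization calculation may differ in detail.

```python
# Nominal gigabit link speed in bits per second (assumption: no framing overhead).
LINK_BITS_PER_SEC = 1_000_000_000


def utilization_pct(bytes_per_sec, link_bits_per_sec=LINK_BITS_PER_SEC):
    """Percent of the link consumed by a given byte rate."""
    return 100.0 * bytes_per_sec * 8 / link_bits_per_sec


def bytes_per_sec_at(pct, link_bits_per_sec=LINK_BITS_PER_SEC):
    """Byte rate that corresponds to a given utilization percentage."""
    return pct * link_bits_per_sec / 100 / 8


# 97% is the utilization figure reported for heliod in this article.
rate_at_97 = bytes_per_sec_at(97)
print(f"97% of a gigabit link is about {rate_at_97 / 1e6:.2f} MB/s")

# 60% is the level none of the other servers (except varnish) reached.
rate_at_60 = bytes_per_sec_at(60)
print(f"60% of a gigabit link is about {rate_at_60 / 1e6:.2f} MB/s")
```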

So there you have it… heliod can sustain far higher throughput than any of the popular web servers in this static file test and it can do so efficiently, saturating the network on a low power two core machine while leaving plenty of CPU idle. It even manages to sustain higher throughput than varnish which specializes in caching static content efficiently and is not a full featured web server.
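One rough way to quantify "efficiently" is network utilization delivered per percent of busy CPU. The sketch below uses only figures quoted in this article (heliod: 97% utilization with 62% CPU idle; varnish: 90-94% utilization with 9% CPU idle). The metric itself is my own illustration, and it assumes the varnish utilization range and its 9% idle figure refer to the same point in the run.

```python
def util_per_busy_cpu(net_util_pct, cpu_idle_pct):
    """Network utilization percent delivered per percent of CPU in use."""
    busy = 100.0 - cpu_idle_pct
    if busy <= 0:
        raise ValueError("CPU was fully idle; ratio is undefined")
    return net_util_pct / busy


# Figures quoted in the article text.
heliod_ratio = util_per_busy_cpu(97, 62)

# Varnish utilization was reported as a range, so compute both ends.
# Assumption: the 9% idle figure applies across that range.
varnish_low = util_per_busy_cpu(90, 9)
varnish_high = util_per_busy_cpu(94, 9)

print(f"heliod:  {heliod_ratio:.2f}")
print(f"varnish: {varnish_low:.2f} to {varnish_high:.2f}")
```

By this rough measure heliod delivers around two and a half times as much network utilization per unit of busy CPU as varnish on this machine.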

Of course, all benchmarks are by necessity artificial. If any of the variables change the numbers will change and the rankings may change. These results are representative of the exact use case and setup I tested, not necessarily of any other. Again, for details on what and how I tested, see my previous article.

I hope to test other scenarios in the future. I’d also love to test on a faster CPU with lots of cores. Unfortunately I don’t own such hardware, so it is unlikely to happen.

Finally, I set up a github repository fabhttp which contains:

- source code of the faban driver used to run these tests

- dstat/nicstat data collected during the runs (used to generate the graphs above)

- additional graphs generated by faban for every individual run

Even though this page is listed as less than a month old, most of the web server versions here are extremely old. For example, nginx is currently at 1.2.5 stable and 1.3.8 development; 0.7.x is ANCIENT.

Check the previous article for details on the environment conditions of these runs. The server versions are those available on Debian 6.0.6 (latest release). Distribution package versions are inevitably always older than where each project currently stands. Packaged versions are also the most commonly used, as most users do not compile their servers manually.

So, this “performance” test was done with…

– less than *10 concurrent* clients (what about a test under real load)?

– a *java* client test tool (you test the JVM, not the C epoll-based servers)

– a tiny dual-Core *Intel Atom* D510 CPU (passmark score: 659)

– Linux 32-bit (while Linux 64-bit is so much faster for the network)

Are you serious?

Of course, no: you cheated with the results, as G-WAN was tested on a similar CPU at the much higher rate of 35,000 requests per second.

Here are the CPU ratings:

====================

http://www.cpubenchmark.net/cpu.php?cpu=Intel+Atom+D510+%40+1.66GHz

http://www.cpubenchmark.net/cpu.php?cpu=Intel+Atom+N570+%40+1.66GHz

Here is the chart:

=============

http://gwan.ch/imgs/gwan_intel_atom.png

Here are the benchmark results:

===============================================================================

G-WAN ab.c ApacheBench wrapper, http://gwan.ch/source/ab.c.txt

——————————————————————————-

Machine: 1 x 2-Core CPU(s) Intel(R) Atom(TM) CPU N570 @ 1.66GHz

RAM: 2.72/3.74 (Free/Total, in GB)

Linux x86_64 v#1 SMP Fri May 20 03:51:51 BST 2011 2.6.32-71.el6.x86_64

CentOS Linux release 6.0 (Final)

weighttp -n 1000000 -c [0-1000 step:10] -t 2 -k "http://127.0.0.1:8080/100.html"

Client Requests per second CPU

———– ——————————- —————- ——-

Concurrency min ave max user kernel MB RAM

———– ——— ——— ——— ——- ——- ——-

1, 15440, 16831, 19165,

10, 25508, 32133, 35316,

20, 29475, 34087, 35017,

30, 27282, 33330, 34706,

40, 24518, 33530, 35280,

50, 28097, 33285, 34430,

60, 26667, 33370, 34663,

70, 33103, 33513, 34097,

80, 32054, 33638, 34100,

90, 31936, 33410, 33916,

100, 32649, 33345, 34008,

110, 31607, 33268, 33989,

120, 28886, 32821, 33836,

130, 31186, 33346, 34368,

140, 27859, 33007, 34131,

150, 32907, 33415, 34203,

160, 31461, 33144, 34047,

170, 28658, 32204, 34037,

180, 32119, 33538, 34217,

190, 31426, 33437, 34356,

200, 32824, 33674, 34276,

210, 33044, 33822, 34317,

220, 32481, 33559, 34262,

230, 33668, 33937, 34196,

240, 31842, 33796, 34670,

250, 33746, 34084, 34378,

260, 32446, 33921, 34413,

270, 31275, 33710, 34781,

280, 32776, 33810, 34341,

290, 31555, 33935, 34925,

300, 33058, 33918, 34294,

310, 32751, 33765, 34230,

320, 32123, 33660, 34347,

330, 32454, 33952, 34441,

340, 31437, 33835, 34411,

350, 32110, 33415, 34301,

360, 33016, 34089, 34362,

370, 32270, 33833, 34441,

380, 32667, 33484, 34323,

390, 33170, 33846, 34387,

400, 33928, 34208, 34402,

410, 33289, 33998, 34326,

420, 32834, 34042, 34354,

430, 32748, 33930, 34270,

440, 33638, 34128, 34418,

450, 33235, 34061, 34473,

460, 33524, 34085, 34454,

470, 33478, 34115, 34437,

480, 32858, 33907, 34392,

490, 33653, 34087, 34368,

500, 32364, 33706, 34500,

510, 33493, 33930, 34256,

520, 33462, 33978, 34389,

530, 33018, 33954, 34388,

540, 32977, 33921, 34270,

550, 33390, 33971, 34361,

560, 33934, 34166, 34349,

570, 32653, 33937, 34353,

580, 32491, 33783, 34394,

590, 32849, 33878, 34318,

600, 32798, 33858, 34333,

610, 33808, 34106, 34316,

620, 32608, 33920, 34349,

630, 33696, 34026, 34307,

640, 33567, 34036, 34329,

650, 33953, 34168, 34331,

660, 32656, 33788, 34245,

670, 32715, 33853, 34275,

680, 33707, 34052, 34282,

690, 32835, 33921, 34183,

700, 33617, 33904, 34326,

710, 33794, 34045, 34288,

720, 33756, 34050, 34237,

730, 32575, 33951, 34254,

740, 32622, 33693, 34153,

750, 33667, 33954, 34132,

760, 33590, 33965, 34214,

770, 33639, 34023, 34228,

780, 33464, 34061, 34215,

790, 32618, 33837, 34226,

800, 33417, 34004, 34151,

810, 32583, 33804, 34233,

820, 32666, 33866, 34177,

830, 33754, 34023, 34266,

840, 33583, 33912, 34248,

850, 32834, 33903, 34238,

860, 33759, 34060, 34224,

870, 33663, 33993, 34252,

880, 21612, 32772, 34267,

890, 33642, 33952, 34173,

900, 32595, 33936, 34187,

910, 33774, 34035, 34193,

920, 33972, 34107, 34263,

930, 32585, 33833, 34216,

940, 32673, 33884, 34240,

950, 33808, 34115, 34287,

960, 33723, 34007, 34257,

970, 32548, 33770, 34163,

980, 33979, 34120, 34188,

990, 33829, 34083, 34187,

1000, 33631, 33949, 34112,

——————————————————————————-

min:3251682 avg:3395821 max:3451195 Time:30231 second(s) [08:23:51]

——————————————————————————-

Your numbers are different because you are measuring something different (a single 100 byte file on localhost). Comparing results from very different benchmarks is meaningless.

All the data and code you need to reproduce these runs is available on the github repository noted in the article, so you are free to run it on your own hardware and report results.

As to “cheating”… LOL! Just because G-WAN did very poorly in this test does not mean the results are not correct. All servers were tested under precisely the same conditions. Perhaps G-WAN will do better in a different scenario.